How Artificial Intelligence Will Affect Your Editing Career

(Guest article written by Philip Hodgetts of Intelligent Assistance).

Current State of AI In Filmmaking

When you read a headline like “Artificial Intelligence edits trailer for movie” it’s hard not to worry about how it might affect you and your career. Well, the news is both good and a little challenging.

The media likes to hype stories, but the reality is that no software algorithm or Artificial Intelligence (AI) cut a trailer for the movie Morgan. IBM Watson was programmed to find areas of high action or high emotion in the movie, and to pull those as selects for an experienced (human) trailer editor, who then created the trailer.

That “AI” pulled selects based on action and emotion is an amazing achievement and an indicator of where “AI” is right now. You may have noticed I put “AI” in air quotes because this is not autonomous AI: true think-like-a-human machines.

Autonomous Artificial General Intelligence – the idea that a ‘machine’ could successfully perform any intellectual task that a human being can – has worried the heck out of me ever since I read Tim Urban’s two-part article The AI Revolution: The Road to Superintelligence.

We’re not there yet. We may never create a true AI. AlphaGo, Sophia, self-driving cars, and to a lesser extent personal assistants like Alexa, Siri, et al. are as close as we get to Artificial General Intelligence.

Learning Machines

But, before you can think you have to learn, and Machine Learning (ML) is booming. Learning Machines aren’t programmed like a traditional computer algorithm. Learning Machines learn from examples and feedback.

In the training phase, many hundreds, or thousands, of examples are provided to the machine (the exact mechanism varies according to the data) and the good examples are noted. As the machine learns, it ‘guesses’ the result and tunes those guesses based on which were successful and which were less so. Ultimately the machine becomes as good at the task as its trainer(s).
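To make the guess-and-tune loop concrete, here is a minimal sketch of a very simple learning machine (a perceptron) in Python. It is only an illustration of the idea, not how any particular product works: the two features (motion level and audio loudness), the example values, and the "high action" labels are all invented for this example.

```python
# Toy "learning machine": a perceptron that learns from labelled examples.
# All feature names and numbers below are hypothetical, for illustration only.

def predict(weights, bias, features):
    """Make a guess: 1 ("high action") if the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Tune weights from (features, label) pairs, where label is 0 or 1."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            guess = predict(weights, bias, features)
            error = label - guess  # feedback: 0 if correct, +1 or -1 if wrong
            # Nudge each weight in the direction that reduces the error.
            for i, x in enumerate(features):
                weights[i] += learning_rate * error * x
            bias += learning_rate * error
    return weights, bias


# Hypothetical training shots: (motion level, audio loudness) -> 1 = high action.
EXAMPLES = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.7), 1),
    ((0.2, 0.1), 0),
    ((0.1, 0.3), 0),
    ((0.3, 0.2), 0),
]

weights, bias = train(EXAMPLES)
```

After training, `predict(weights, bias, (0.85, 0.85))` classifies a new, unseen shot. Nobody wrote a rule saying what "high action" means; the weights were tuned purely from examples and feedback, which is the point of the paragraph above. Real learning machines use far more examples and far richer models.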

Learning Machines are readily available to all comers, but there are ways to benefit from them without even using one directly.

Whether they are used for finding tax deductions, identifying skin cancers from diagnostic images, winning at poker, or calculating insurance payouts, these machines have been specifically trained for the task by showing them thousands of examples and providing feedback.

Misha adds: Check out Sunspring, a screenplay written by the learning machine Benjamin.

I’ll explore custom ML applications for post production shortly, but we have a lot of benefits already available, right now.

Learning Machines have already been applied to ‘common’ tasks like speech to text, keyword extraction, image recognition, facial recognition, emotion detection, sentiment analysis, etc. and the resulting ‘smart Application Programming Interfaces (APIs)’ are available to anyone who wants to pay the (quite small) fees for use.

Current technology now can:

- Transcribe speech to text with accuracy equal to a human

- Extract keywords from the transcript

- Recognize images and return keywords describing their content

- Identify faces and link instances of the same face

- Recognize emotion.

These tools are just beginning to be used. We at Lumberjack System plan on adding more of these smart tools this year.

Future NLE technology could work like this:

Misha's rendition of editing in the future.

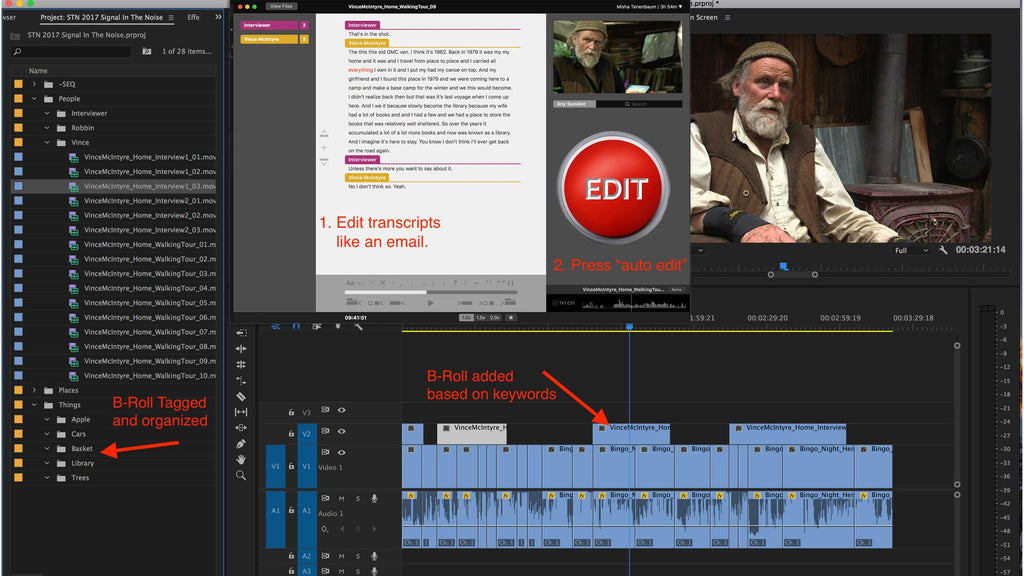

- At the end of a shoot, interviews are analyzed and delivered to the editor fully transcribed and ready to search by word – a real phrase find instead of one that requires phonetic spellings to get a match.

- Key concepts are derived from the transcript and used to segment and label the transcripts with keywords, which are then used to organize the clips. Subject (keyword) based timelines are automatically generated as an editing starting point.

- For scripted production, transcripts would be used to align all takes (or angles) of the same section of script to the script. (Not unlike Avid’s implementation of Nexidia’s technology in ScriptSync, but without Avid’s ScriptSync interface.)

- Areas of strong emotion are highlighted, with each emotion being tagged. (How useful would that be for reality TV?)

- People (talent, actors/characters) are recognized and grouped by face. Faces will need to be identified either by direct entry (once) or by comparison with social media or a cast list.

- The b-roll is analyzed and identified by shot type (wide, medium, close up) and content, provided as keywords and organized into bins or collections.

All of these features could be implemented now, ready for you to be creative.
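As a rough illustration of the keyword-based timelines in the list above, here is a small Python sketch. It assumes the clips have already been tagged with keywords by some upstream smart API; the `Clip` structure, the `build_keyword_timelines` function, and the sample clips are all hypothetical and do not reflect how any NLE or Lumberjack System actually stores its data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """A hypothetical interview clip that has already been keyword-tagged."""
    name: str
    start: float      # seconds from the start of the source recording
    keywords: tuple   # keywords assumed to come from an upstream tagging step


def build_keyword_timelines(clips):
    """Group clips by keyword, ordering each group by source start time."""
    groups = defaultdict(list)
    for clip in clips:
        for keyword in clip.keywords:
            groups[keyword.lower()].append(clip)
    return {
        keyword: [c.name for c in sorted(members, key=lambda c: c.start)]
        for keyword, members in sorted(groups.items())
    }


# Made-up sample data for illustration.
CLIPS = [
    Clip("int_A_03", 95.0, ("Budget", "Schedule")),
    Clip("int_A_01", 12.5, ("budget",)),
    Clip("int_B_02", 40.0, ("schedule", "casting")),
]

timelines = build_keyword_timelines(CLIPS)
```

Each key in `timelines` is a subject, and each value is a list of clip names in source order, which is roughly the "editing starting point" described in the list. The hard part, deriving good keywords from the transcript, is left to the learning machine.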

Heading into the future we’ll see more machine learning applied to post processes. While a general purpose “edit this for me” machine isn’t coming soon, it won’t be long before machine learning will be able to pull together a highly competent wedding, corporate, education or news video because these types of production, while not exactly formulaic, all follow a fairly strict pattern.

I consider whether this software can be creative in another article, but I believe that AI-assisted human creativity is our immediate future. It will certainly make it easier for creative producers/preditors to realize their vision.

I am a 2nd year student at Ravensbourne University and I am writing an essay on Artificial Intelligence and have a few questions. What will the future be for AI in editing? How has AI affected the film industry? Thank you.
